Large Language Models (LLMs) excel at generating text but struggle with up-to-date knowledge and private data. They can produce outdated or incomplete responses since they rely on static training data.

Retrieval-augmented generation (RAG) addresses this by injecting relevant context into the LLM's input, which helps reduce hallucinations. The approach has gained significant traction in the AI industry and has been adapted in various ways to suit specific needs.

One such variation is GraphRAG, a more structured and hierarchical approach to RAG. From a technical perspective, it augments traditional vector-based retrieval by organizing text embeddings into a graph structure, where nodes represent entities or information units and edges define semantic or contextual relationships. This allows retrieval to be guided by both similarity and structure. In this post, we discuss GraphRAG in detail and walk through an implementation using ApertureDB.

Problems with Vanilla RAG

While RAG improves LLM responses by providing external context, traditional RAG systems, often dependent solely on vector search, have significant limitations.

- Connecting Disparate Information: When an answer requires linking multiple data points through shared attributes, vanilla RAG often fails to synthesize meaningful conclusions.

- Understanding Summarized Concepts: Vanilla RAG performs poorly when asked to extract overarching themes from large datasets or lengthy documents.

Vector search retrieves documents based on semantic similarity, meaning only information closely related in language gets pulled in. But what happens when relevant data isn’t semantically similar, only contextually related?

For example, a sales forecast for a company in the UK might depend on logistics disruptions along a distant trade route. A vanilla RAG system using vector search alone would likely miss this connection, because the key information isn’t phrased in a way that appears directly relevant to the question. This narrow scope limits retrieval accuracy and, more importantly, relevance, especially for complex, interconnected data whose meaning depends on relationships beyond simple similarity, as is typical in real-world use cases.
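To make the gap concrete, here is a minimal sketch that scores two toy documents against a sales question. It uses bag-of-words vectors and cosine similarity as a stand-in for a learned embedding model (an assumption for illustration; real embeddings handle paraphrase far better, but the failure mode is the same when a document shares no topical signal with the query). The document texts are hypothetical paraphrases, not entries from the dataset used later.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


query = "sales forecast for Example Corp in the UK"
docs = {
    # Hypothetical documents: one about UK demand, one about a shipping disruption
    "uk_orders": "UK retailers expect strong Christmas sales of Example Corp toys",
    "canal_delay": "Landslips block a key canal, delaying container ships bound to Europe",
}

scores = {name: cosine(bag_of_words(query), bag_of_words(text)) for name, text in docs.items()}
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.2f}")
```

The canal document scores zero, even though a blocked shipping route could decide whether those UK orders are fulfilled at all. An explicit edge between the two documents is what lets a graph-aware retriever recover that link.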

Let’s look at the foundation of GraphRAG: knowledge graphs (KGs).

What are Knowledge Graphs?

A knowledge graph (KG) is a data model that represents structured and unstructured information as a network of connected entities. Unlike traditional databases that enforce rigid schemas, KGs allow both the attributes of data points and the relationships between them to evolve naturally.

The knowledge graph acts as a dynamic memory for the LLM in a RAG system, complementing its language capabilities with structured context. Entities (such as people, products, or concepts) are stored as nodes with attributes, while the relationships between them carry additional meaning. This structure scales from simple hierarchies to vast digital twins of organizations encompassing millions of connections.

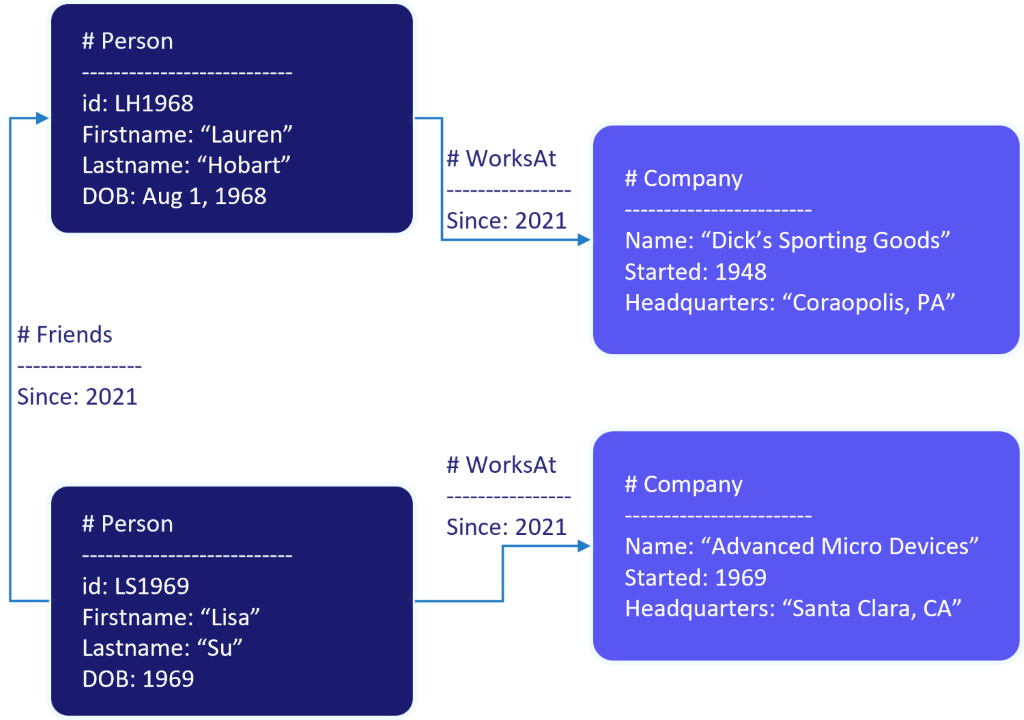

Basic concepts of KG nodes and edges: Person and Company are nodes, and the connection between them is an edge | Source
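The node-and-edge model in the figure can be sketched in a few lines of plain Python. This is a minimal in-memory illustration, not ApertureDB's API; the WORKS_AT relationship type and the person's name are hypothetical. Nodes carry a type label and attributes, and edges carry a relationship type plus attributes of their own.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    node_id: str
    label: str                                  # entity type, e.g. "Person" or "Company"
    attributes: Dict = field(default_factory=dict)


@dataclass
class Edge:
    src: str
    dst: str
    rel_type: str                               # relationship type, e.g. "WORKS_AT" (hypothetical)
    attributes: Dict = field(default_factory=dict)


@dataclass
class KnowledgeGraph:
    nodes: Dict[str, Node] = field(default_factory=dict)
    edges: List[Edge] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_edge(self, edge: Edge) -> None:
        # Refuse dangling edges: both endpoints must already be nodes.
        if edge.src not in self.nodes or edge.dst not in self.nodes:
            raise KeyError(f"unknown endpoint in {edge}")
        self.edges.append(edge)

    def neighbors(self, node_id: str, rel_type: Optional[str] = None) -> List[Node]:
        """Nodes one outgoing edge away, optionally restricted to one relationship type."""
        return [
            self.nodes[e.dst]
            for e in self.edges
            if e.src == node_id and (rel_type is None or e.rel_type == rel_type)
        ]


kg = KnowledgeGraph()
kg.add_node(Node("jane", "Person", {"name": "Jane Doe"}))
kg.add_node(Node("example_corp", "Company", {"name": "Example Corp", "hq": "Austin"}))
# The relationship itself carries data, not just the fact that a link exists.
kg.add_edge(Edge("jane", "example_corp", "WORKS_AT", {"since": 2021}))

print([n.attributes["name"] for n in kg.neighbors("jane", "WORKS_AT")])
```

Typed, attributed edges are what let a retriever later ask questions like "which companies is this person connected to, and how?" rather than only "which text looks similar?".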

Ontologies, taxonomies, graph databases, and knowledge graphs are often discussed together, but they serve distinct roles. Ontologies define the entities, attributes, and relationships in a domain, whereas taxonomies capture hierarchical classification into categories. Graph databases store the relationships and data; triple stores are a specific implementation often used for semantic data. Knowledge graphs integrate all these elements to provide contextual knowledge systems for advanced reasoning and querying.
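To see how these pieces can coexist, here is a minimal sketch of a triple store: every fact is a (subject, predicate, object) triple, and queries are patterns in which None acts as a wildcard. It is an illustration only, not the storage model ApertureDB uses; the predicate names are hypothetical, while the facts themselves come from the dataset article about Example Corp. Note how a taxonomy-style fact (a class hierarchy) sits alongside instance facts in the same store.

```python
from typing import Iterator, Optional, Set, Tuple

Triple = Tuple[str, str, str]


class TripleStore:
    """A minimal in-memory store of (subject, predicate, object) triples."""

    def __init__(self) -> None:
        self.triples: Set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))

    def match(self, subject: Optional[str] = None, predicate: Optional[str] = None,
              obj: Optional[str] = None) -> Iterator[Triple]:
        """Yield triples matching the pattern, in sorted order; None is a wildcard."""
        for s, p, o in sorted(self.triples):
            if ((subject is None or s == subject)
                    and (predicate is None or p == predicate)
                    and (obj is None or o == obj)):
                yield (s, p, o)


store = TripleStore()
store.add("DesktopPet", "subClassOf", "Toy")        # taxonomy: class hierarchy
store.add("Widget", "type", "DesktopPet")           # instance facts
store.add("Widget", "manufacturedIn", "Taiwan")
store.add("ExampleCorp", "makes", "Widget")

print(list(store.match(predicate="makes", obj="Widget")))   # who makes Widget?
print(list(store.match(subject="Widget")))                  # everything known about Widget
```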

Solutions with GraphRAG

GraphRAG is an advanced approach that enhances standard RAG systems by incorporating a knowledge graph. It connects information through structured relationships, enabling it to retrieve relevant details not explicitly mentioned in the initial search results, leading to more comprehensive and context-aware responses.

Advantages of GraphRAG

- More Comprehensive Retrieval: GraphRAG can connect related pieces of information beyond direct keyword or vector matches by navigating graph structures.

- Improved Relevance Filtering: Retrieved data can be ranked and filtered based on user context.

- Better Explainability: The structured relationships captured in a knowledge graph make it easier to trace the reasoning behind generated responses.
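As an illustration of the relevance-filtering idea, here is a minimal sketch that drops retrieved items outside a user's context and ranks the rest. The fields (score, publish_date, region) and the shape of the user context are hypothetical assumptions for this example; they are not part of the dataset or the implementation later in this post.

```python
from datetime import date
from typing import Dict, List


def filter_and_rank(items: List[Dict], user_ctx: Dict) -> List[Dict]:
    """Drop items outside the user's context, then rank the rest.

    Hypothetical fields: each item has 'score' (retrieval similarity),
    'publish_date' (ISO date string) and 'region'; user_ctx has a set of
    allowed 'regions' and a 'since' ISO date.
    """
    since = date.fromisoformat(user_ctx["since"])
    allowed = [
        item for item in items
        if item["region"] in user_ctx["regions"]
        and date.fromisoformat(item["publish_date"]) >= since
    ]
    # Highest similarity first; ties go to the more recent item
    # (ISO date strings sort chronologically).
    return sorted(allowed, key=lambda item: (item["score"], item["publish_date"]), reverse=True)


retrieved = [
    {"id": 1, "score": 0.82, "publish_date": "2023-07-01", "region": "US"},
    {"id": 2, "score": 0.82, "publish_date": "2023-08-01", "region": "UK"},
    {"id": 3, "score": 0.91, "publish_date": "2022-01-15", "region": "UK"},
    {"id": 4, "score": 0.75, "publish_date": "2023-09-10", "region": "Global"},
]
user_ctx = {"regions": {"UK", "Global"}, "since": "2023-01-01"}
print([item["id"] for item in filter_and_rank(retrieved, user_ctx)])
```

In a graph setting, the same filters can also be applied to nodes reached by traversal, not only to the initial vector-search hits.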

Why Implement GraphRAG with ApertureDB?

ApertureDB combines graph and vector capabilities in a single system, which makes it well suited to GraphRAG. It can manage multiple data modalities and represent them in a graph alongside application concepts, so it can plug directly into the retrieval process for both structured and unstructured data. Neo4j, while powerful for graph data, may require integration with an external vector database for hybrid use cases. Many other vector databases focus solely on semantic similarity. SQL-based systems can also model graph-like connections through foreign keys, but multi-hop traversals there typically require repeated joins, which lack the flexibility and efficiency of a native graph approach.

Step-by-Step Guide on Creating a GraphRAG with ApertureDB

This guide provides a step-by-step implementation of GraphRAG using ApertureDB and LangChain. By the end, we will see how GraphRAG produces better responses than vanilla RAG by incorporating contextual information. GraphRAG can be designed in different ways depending on the retrieval needs. Some approaches focus on structured searches, while others use embeddings or agent-based decision-making. Here are three common architectures:

- Dynamic Query Generation: A fine-tuned LLM (such as a Text2Cypher model) generates a Cypher query from the user’s question, allowing precise graph traversal based on the schema. Cypher is the query language used to interact with the Neo4j graph database. ApertureDB uses a different, JSON-based query language (ApertureQL), and this method can be extended to generate graph traversal queries for graphs stored in ApertureDB.

- Graph Embedding Retrievers: Instead of direct lookups, this method uses embeddings to represent node neighborhoods, enabling fuzzy topological searches for similar patterns.

- Agentic Traversal: Uses an LLM as an orchestrator, selecting and running different retrieval methods iteratively. The model determines which tools to use, sequences retrieval steps, and refines results dynamically.
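The graph-embedding idea can be illustrated with a minimal sketch: represent each node by the mean of its own embedding and its neighbors' embeddings (one round of mean aggregation). This is a simple stand-in chosen for clarity, not the method of any particular library; production systems typically learn such representations (for example with node2vec or graph neural networks). The 2-d vectors and node names are toy assumptions.

```python
import math
from typing import Dict, List

Vector = List[float]


def neighborhood_embedding(node: str, emb: Dict[str, Vector], adj: Dict[str, List[str]]) -> Vector:
    """Average a node's embedding with its neighbors' (one round of mean aggregation)."""
    members = [node] + adj.get(node, [])
    dim = len(emb[node])
    return [sum(emb[m][i] for m in members) / len(members) for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Toy 2-d "embeddings": axis 0 ~ sales topics, axis 1 ~ logistics topics
emb = {"orders": [1.0, 0.0], "canal": [0.0, 1.0], "partner": [0.5, 0.5]}
adj = {"orders": ["partner"], "partner": ["orders", "canal"], "canal": ["partner"]}
neigh = {n: neighborhood_embedding(n, emb, adj) for n in emb}

query = [0.0, 1.0]  # a logistics-flavoured query
print("raw:", round(cosine(query, emb["orders"]), 3))
print("neighborhood:", round(cosine(query, neigh["orders"]), 3))
```

On its own, the "orders" node has no logistics signal, so the logistics query cannot reach it; once its neighborhood is folded in, it picks up some similarity through its logistics-partner neighbor. This is the kind of fuzzy, structure-aware matching the second bullet describes.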

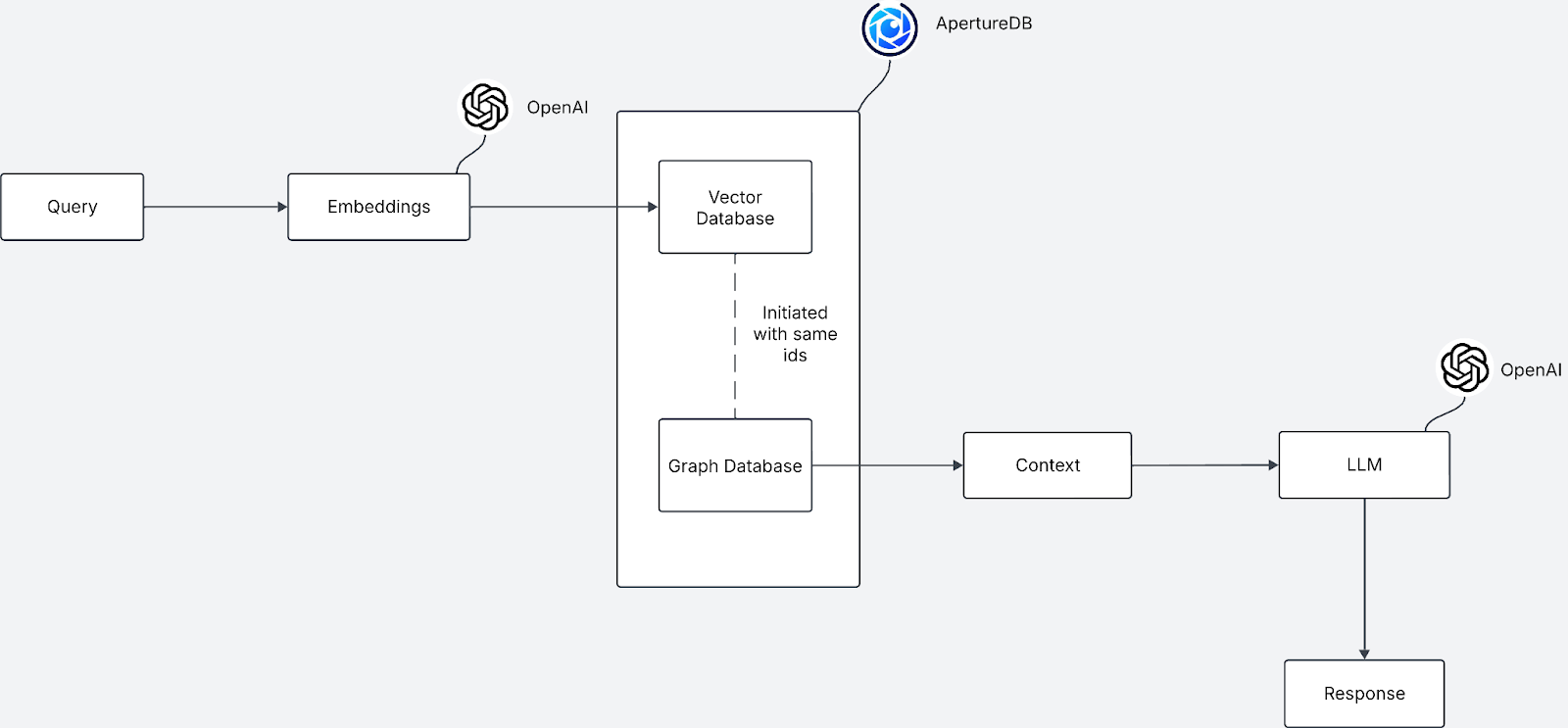

We’ll use an embedding-based approach with vector similarity search for this guide. The stored text chunks and the user’s question are both embedded with OpenAI’s embedding model. A vector search finds the most relevant chunks, and from the best match we traverse the graph to gather additional context.

Here’s a high-level breakdown of the GraphRAG architecture, module by module, based on the diagram:

- Query & Embeddings: A user submits a question, which is converted into vector embeddings using OpenAI’s embedding model.

- ApertureDB (Vector + Graph): The query embedding is matched against stored vectors in ApertureDB to retrieve relevant chunks. ApertureDB also holds graph relationships that link these chunks, providing a structured view of entities and connections.

- Context Assembly: Relevant text chunks and their associated graph-based metadata from ApertureDB are combined into a coherent context package.

- LLM: OpenAI’s large language model processes the assembled context to generate a grounded answer.

- Response: The final answer is returned to the user, incorporating both vector-based retrieval and graph insights.
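The flow above can be sketched end to end in memory before bringing in OpenAI or ApertureDB. In this minimal sketch, a hypothetical two-feature function stands in for the embedding model and a plain dict of edges stands in for the graph; the chunk texts are toy paraphrases (the 2 to 4 link mirrors the dataset's relation field, while article 6 is invented for illustration). The result is the ordered context that would be handed to the LLM.

```python
import math
from typing import Callable, Dict, List, Optional

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def graph_rag_context(question: str,
                      embed: Callable[[str], Vector],
                      chunks: Dict[int, str],
                      next_hop: Dict[int, int]) -> List[str]:
    """Pick the chunk most similar to the question, then follow graph edges from it."""
    q = embed(question)
    chunk_vecs = {cid: embed(text) for cid, text in chunks.items()}

    # 1. Vector search: the single best-matching chunk is the entry point.
    start = max(chunk_vecs, key=lambda cid: cosine(q, chunk_vecs[cid]))

    # 2. Graph traversal: hop along edges, stopping at a dead end or a cycle.
    context: List[str] = []
    seen = set()
    current: Optional[int] = start
    while current is not None and current not in seen:
        seen.add(current)
        context.append(chunks[current])
        current = next_hop.get(current)

    # 3. Context assembly: this ordered list is what would be sent to the LLM.
    return context


def toy_embed(text: str) -> Vector:
    """Hypothetical 2-d embedding: [mentions sales, mentions shipping]."""
    lowered = text.lower()
    return [float("sales" in lowered), float("shipping" in lowered)]


chunks = {
    2: "UK sales outlook: retailers have ordered over 1 million Widget units",
    4: "AnyCompany Logistics shortens shipping times from Taiwan to the UK",
    6: "Landslips in the canal are disrupting shipping to Europe",
}
next_hop = {2: 4, 4: 6}  # edges: 2 -> 4 -> 6

for passage in graph_rag_context("What are the sales prospects in the UK?", toy_embed, chunks, next_hop):
    print(passage)
```

Only chunk 2 matches the question by similarity; chunks 4 and 6 enter the context purely through the graph edges.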

With an overview of the architecture in place, let's start building.

Problem Overview

As we discussed earlier, vector search retrieves information similar to the query. However, this can be problematic when the stored paragraphs or text are conceptually alike but phrased differently, making it difficult for the algorithm to recognize their similarity. This is the challenge we aim to address, and as we analyze the dataset, the issue will become clearer.

Implementation

We'll begin with the setup and installation steps captured in the Colab notebook in this GitHub repository.

1. Setup and Installations

In this section, we set up the required dependencies and configure both OpenAI and ApertureDB. This ensures the necessary libraries are installed and both services are properly authenticated before proceeding. These packages include:

- langchain and langchain-openai for integrating with OpenAI models.
- langchain-experimental for additional experimental functionality.
- aperturedb for interacting with ApertureDB.
- openai for direct API interactions with OpenAI models.

Once the packages are installed, import the required modules:

```python
import os
import json
from typing import List, Dict

from google.colab import userdata
from pydantic import BaseModel
from openai import OpenAI
from langchain_openai import OpenAIEmbeddings
from langchain_core.documents import Document  # Document lives in langchain_core in recent LangChain releases
from langchain_community.vectorstores import ApertureDB
from aperturedb.Utils import Utils
from aperturedb.CommonLibrary import create_connector
```

Another prerequisite is to set up ApertureDB. ApertureDB runs as a database server and supports remote access as long as the network allows client connections. To set it up:

- Sign up for an Aperture Cloud account (30-day free trial available).

- Configure your ApertureDB client in Google Colab (see the quickstart video).

After setting up the database, validate the connection by creating a client and checking the summary:

```python
# Create the connector for ApertureDB (replace the placeholders with your own values)
client = create_connector(
    host="<your cloud or community instance host name>",
    user="admin",
    password="<your DB password>",
)

utils = Utils(client)
utils.summary()
```

With the setup complete, we can proceed to the next step: dataset preparation.

2. Dataset Preparation

The dataset is specifically designed to highlight the significance of GraphRAG. It includes predefined relationships to facilitate later traversals. In real-world scenarios, the corpus text would typically be passed to an LLM, which extracts the nodes and their relationships.

Here’s a snapshot of the dataset:

```json
[
  {
    "Id": "1",
    "content": "Example Corp, the US-based maker of the popular Widget personal gizmo, has recently extended its worldwide distribution channels by partnering with AnyCompany Logistics, an international shipping, storage, and last-mile distribution provider. Widget is an AI-augmented personal desktop pet whose conversational capabilities are powered by a new breed of generative AI technologies. Developed in the Austin-based Example Corp labs, the toys are manufactured in Taiwan.",
    "class": "Article",
    "publish_date": "2023-07-01",
    "author": "Example Corp",
    "relation": ["3"]
  },
  {
    "Id": "2",
    "content": "The UK's Christmas top-10 toy predictions are already in, even though it's only August, with industry analysts predicting huge demand for Example Corp's Widget chatty desktop pet. Retailers in London, Manchester, and other major cities have already placed orders for over 1 million units, to the value of $15 million, and those numbers are only set to increase in the months leading up to Christmas.",
    "class": "Article",
    "publish_date": "2023-08-01",
    "author": "Toy Industry Analyst",
    "relation": ["4"]
  },
  ……
]
```

3. Ingestion

This section covers ingestion into both the vector and the graph side of ApertureDB. We prepare the data and store it so that it supports fast similarity retrieval as well as traversal of the relationships between entities.
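Before ingesting, it can help to sanity-check the dataset. In the graph ingestion that follows, AddConnection refers to articles by _ref values that exist only within the same query, so a relation pointing at an Id missing from the batch would most likely make the query fail. The sketch below is a hypothetical helper, not part of the original notebook; it assumes the full dataset is available as a JSON string (how you read the file is up to you).

```python
import json
from typing import Dict, List


def load_articles(raw_json: str) -> List[Dict]:
    """Parse the article list and check that it is safe to ingest as a graph."""
    articles = json.loads(raw_json)

    # Parse each Id as a whole integer (so "10" becomes 10, not 1).
    ids = [int(article["Id"]) for article in articles]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate article Ids")

    # Every relation must point at an article in the same batch.
    known = set(ids)
    for article in articles:
        for dst in article.get("relation", []):
            if int(dst) not in known:
                raise ValueError(f"article {article['Id']} relates to unknown Id {dst}")
    return articles


sample = json.dumps([
    {"Id": "1", "content": "placeholder text", "relation": ["3"]},
    {"Id": "3", "content": "placeholder text", "relation": []},
])
articles = load_articles(sample)
print(len(articles), "articles validated")
```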

Vector Database Ingestion

To store documents as embeddings in ApertureDB, we first convert raw data into a format compatible with LangChain:

```python
embeddings = OpenAIEmbeddings()

def convert_to_langchain_docs(data: List[Dict]) -> List[Document]:
    """Converts raw article records into LangChain Document objects."""
    documents = []
    for item in data:
        # Use the fields that exist in the dataset, and keep the article Id in the
        # metadata so vector search results can be mapped back to graph nodes.
        metadata = {
            "Id": int(item["Id"]),
            "author": item.get("author", ""),
            "publish_date": item.get("publish_date", ""),
        }
        doc = Document(page_content=item.get("content", ""), metadata=metadata)
        documents.append(doc)
    return documents

# Convert the dataset (loaded as `data`) into documents
documents = convert_to_langchain_docs(data)

# Store in ApertureDB as vector embeddings
vector_db = ApertureDB.from_documents(documents, embeddings)
```

- Initialize OpenAIEmbeddings: This allows us to generate vector embeddings from textual data.
- Convert Raw Data to LangChain Documents: The function convert_to_langchain_docs() takes each article's content as the document text and keeps its Id, author, and publication date as metadata. The Id is what later links a vector search hit to the corresponding node in the graph.
- Store the Documents in ApertureDB: Using ApertureDB.from_documents(), the processed documents are converted into vector embeddings and stored in ApertureDB.

Graph Database Ingestion

To store articles in ApertureDB as a graph, we define a function that inserts each article as an entity (class "Article") and links related articles with connections (class "related_article"):

```python
def add_articles_to_db(client, articles):
    """Adds articles as entities and establishes relationships in ApertureDB."""
    query = []

    # Add each article as an entity. The article Id doubles as the _ref
    # so that the AddConnection commands below can refer to it.
    for article in articles:
        article_id = int(article["Id"])  # parse the whole Id, not just its first character
        query.append({
            "AddEntity": {
                "_ref": article_id,
                "class": "Article",
                "properties": {
                    "Id": article_id,
                    "content": article["content"],
                    "publish_date": article["publish_date"],
                    "author": article["author"]
                },
                # Only add the entity if no Article with this Id exists yet.
                "if_not_found": {
                    "Id": ["==", article_id]
                }
            }
        })

    # Create connections based on relationships.
    for article in articles:
        for dst in article.get("relation", []):
            query.append({
                "AddConnection": {
                    "class": "related_article",
                    "src": int(article["Id"]),
                    "dst": int(dst),
                    "properties": {"connected_to": int(dst)}
                }
            })

    # Execute the query and print the response.
    response, blobs = client.query(query)
    client.print_last_response()
    return response, blobs
```

- Create Entities: Each article is added as an entity using AddEntity, with properties such as Id, content, publication date, and author. The if_not_found constraint skips articles whose Id is already stored.
- Establish Relationships: The function loops through each article's relation field and creates a related_article connection to each related article using AddConnection.
- Execute the Query: All commands are sent to ApertureDB as a single query, and the response is printed to confirm success.

4. Retrieval

Retrieving relevant information efficiently is a key part of using a vector database for knowledge-based applications. This section demonstrates how to retrieve documents using a vector-based retriever and expands the search by following relationships in the graph database.

Initial Retrieval from Vector Database

To begin, we use the vector_db.as_retriever() method to fetch relevant documents based on a given query:

```python
retriever = vector_db.as_retriever()
context = retriever.invoke(query)  # query is the user's question as a string
```

This retrieves the most relevant documents based on vector similarity search.

Multi-Hop Retrieval Using Graph Connections

Once we retrieve an initial document ID from the vector database, we use a graph traversal method to retrieve additional contextually linked documents.

```python
def retrieve_with_multi_hop(client, query):
    """
    Retrieves an article ID from the vector database and fetches all connected articles using multi-hop retrieval.

    Args:
        client: The ApertureDB client used to execute queries.
        query: The search query for initial document retrieval.

    Returns:
        A list of relevant article contents.
    """
    # Step 1: Retrieve the most relevant documents from the vector database
    # (uses the `retriever` created above)
    retrieved_docs = retriever.invoke(query)
    if not retrieved_docs:
        return []

    # Extract the first retrieved document's article Id (stored in its metadata)
    start_id = retrieved_docs[0].metadata.get("Id")
    if start_id is None:
        return []

    # Step 2: Use multi-hop traversal to fetch related articles
    return multi_hop_article_content(client, start_id)
```

Multi-Hop Article Retrieval Function

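The function multi_hop_article_content walks the graph starting from the given article ID. It fetches the article's content, follows the first related_article connection (via its connected_to property) to the next article, and repeats until there is no further connection or it reaches an article it has already visited.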

```python
def multi_hop_article_content(client, start_id):
    """
    Given a starting article id, fetches its content and then hops along the
    'connected_to' chain until no further connection is found.

    Args:
        client: The API client used to execute queries.
        start_id: The starting Article Id.

    Returns:
        A list of article content strings gathered from the hop chain.
    """
    context = []      # List to hold the content of each Article
    visited = set()   # To avoid loops
    current_id = start_id

    while current_id is not None and current_id not in visited:
        visited.add(current_id)

        # --- Step 1: Fetch the Article content ---
        article_query = [{
            "FindEntity": {
                "with_class": "Article",
                "_ref": 1,
                "constraints": {
                    "Id": ["==", current_id]
                },
                "results": {
                    "list": ["Id", "content"]
                }
            }
        }]
        response, blobs = client.query(article_query)
        article_result = response[0]["FindEntity"]
        if article_result["returned"] == 0:
            break  # No article found; exit loop.

        article = article_result["entities"][0]
        content = article.get("content", "")
        context.append(content)

        # --- Step 2: Find the next connection ---
        connection_query = [{
            "FindEntity": {
                "with_class": "Article",
                "_ref": 1,
                "constraints": {
                    "Id": ["==", current_id]
                }
            }
        }, {
            "FindConnection": {
                "with_class": "related_article",
                "src": 1,
                "results": {
                    "list": ["connected_to"],
                }
            }
        }]
        response, blobs = client.query(connection_query)
        connection_result = response[1]["FindConnection"]
        connections = connection_result.get("connections", [])

        # Look for the first non-null 'connected_to' value.
        next_id = None
        for conn in connections:
            if conn.get("connected_to") is not None:
                next_id = conn["connected_to"]
                break

        # If there is no valid next connection, the loop ends.
        current_id = next_id

    return context
```

The retrieval process follows a hybrid approach, using both vector and graph databases:

- Vector Search: The vector database retrieves documents based on semantic similarity to the input query.

- Graph Traversal: The Id of the top retrieved document is used to fetch related documents via explicit relationships stored in the graph database.

- Multi-Hop Expansion: The traversal continues along the connected_to chain until no further connection is found.

This approach ensures that we retrieve semantically relevant documents and explore related articles based on their structured relationships.
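multi_hop_article_content follows only the first non-null connected_to value at each step, so it walks a single chain. When an article links to several others, a hop-limited breadth-first expansion is one alternative. The sketch below is not part of the original implementation: it runs over an in-memory adjacency dict (a hypothetical stand-in for the FindEntity plus FindConnection lookups above), and it records the path used to reach each article, which could be surfaced alongside the answer for explainability.

```python
from collections import deque
from typing import Dict, List


def expand_with_paths(start: int, adj: Dict[int, List[int]], max_hops: int = 2) -> Dict[int, List[int]]:
    """Breadth-first expansion from `start` that follows every outgoing edge,
    up to `max_hops` hops. Returns the path used to reach each visited node."""
    paths: Dict[int, List[int]] = {start: [start]}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if len(paths[node]) - 1 >= max_hops:
            continue  # this node is already max_hops away; don't expand further
        for neighbor in adj.get(node, []):
            if neighbor not in paths:  # BFS reaches each node first via a shortest path
                paths[neighbor] = paths[node] + [neighbor]
                queue.append(neighbor)
    return paths


# Hypothetical article graph: 2 links to 4 and 7, 4 links to 6, 6 links to 8
adj = {2: [4, 7], 4: [6], 6: [8], 7: []}
for article_id, path in expand_with_paths(2, adj, max_hops=2).items():
    print(article_id, " -> ".join(map(str, path)))
```

The hop limit keeps the context from growing without bound on densely connected graphs; article 8 is three hops away and is therefore left out.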

5. LLM

Once we have retrieved the relevant documents, we pass the gathered context to a Large Language Model (LLM) to generate a response based on the user query.

```python
def query_with_context(context, user_query, model="gpt-4o"):
    """
    Uses the gathered context and a user query to generate a response using OpenAI's API.

    Args:
        context (list): Article contents from the multi-hop process (strings),
            or LangChain Documents returned by the vector retriever.
        user_query (str): The query to ask the assistant.
        model (str): The model to use for chat completions.

    Returns:
        The text of the assistant's response.
    """
    openai_client = OpenAI()  # reads OPENAI_API_KEY from the environment

    # Accept plain strings or LangChain Documents, and join them into one block.
    passages = [getattr(item, "page_content", item) for item in context]
    context_text = "\n\n".join(passages)

    # Construct the messages for the chat completion.
    messages = [
        {"role": "system", "content": "You are a helpful assistant. "
                                      f"Here is some context gathered from our articles:\n\n{context_text}"},
        {"role": "user", "content": user_query}
    ]

    completion = openai_client.chat.completions.create(
        model=model,
        messages=messages
    )
    return completion.choices[0].message.content
```

6. Results

The results show a clear difference between vanilla RAG and GraphRAG.

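```python
query_with_context(context, "What are the sales prospects for Example Corp in the UK?", model="gpt-4o")
```

For vanilla RAG, context is the list of documents returned by the vector retriever; for GraphRAG, it is the list of article contents returned by retrieve_with_multi_hop.

Here’s the response from a vanilla RAG setup: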

The sales prospects for Example Corp in the UK look quite promising. According to industry analysts, there is a predicted huge demand for Example Corp's Widget chatty desktop pet. Retailers in major UK cities like London and Manchester have already placed significant orders for over 1 million units, valued at $15 million. These numbers are expected to increase as the Christmas season approaches, indicating strong sales prospects for Example Corp in this market.

Here’s the response from GraphRAG:

The sales prospects for Example Corp in the UK may currently face challenges due to supply chain disruptions. While Example Corp recently partnered with AnyCompany Logistics to expand its worldwide distribution channels and reduce shipping times from Taiwan to the UK from 2 weeks to just 3 days, these plans have been affected by blockages and delays in the Fictitious Canal. The canal is experiencing landslips, which have trapped container vessels and disrupted supply chains. As a result, goods destined for Europe, including Example Corp's products, could face significant delays, potentially threatening inventory levels for UK retailers, especially during the critical holiday season. This disruption could impact sales negatively in the short term if Example Corp is unable to meet demand due to these shipping issues.

GraphRAG delivers a better answer because it incorporates more context. In RAG, it's often said that generation is only as good as retrieval: GraphRAG gathers relevant context by hopping through related articles and passes contextually related articles, not just semantically similar ones, to the LLM.

Conclusion

Vanilla RAG struggles to maintain context and handle complex relationships, which leads to shallow responses. GraphRAG is a solid choice when data contains complex relationships, because it organizes that data as a knowledge graph.

ApertureDB further enhances this by natively processing structured and unstructured data, including images and videos, eliminating the need for separate databases. It seamlessly integrates vector search and graph capabilities in one system.

Currently, some of the functionality is handled through LangChain, while the rest is handled through the ApertureDB API. In the future, the ApertureData team will work on storing and querying graphs in ApertureDB through LangChain, which will make it even simpler to integrate graphs into RAG applications.

The current implementation assumes the data is always neatly structured, which is not always true in the real world. In later posts, we plan to build knowledge graphs directly from unstructured text using LLMs and ingest them into ApertureDB.
